1 Introduction

Citation networks are an important source of analysis in science. Citations serve multiple purposes, such as crediting an idea, signaling knowledge of the literature, or critiquing others’ work (Martyn, 1975). When citations are thought of as impact, they inform tenure, promotion, and hiring decisions (Meho & Yang, 2007). Furthermore, scientists themselves make decisions based on citations, such as which papers to read and which articles to cite. Citation practices and infrastructures are well developed for journal articles and conference proceedings. However, they are much less developed for datasets. This gap affects the increasingly important role that datasets play in scientific reproducibility (Belter, 2014; On Data Citation Standards & Practices, 2013; Park, You, & Wolfram, 2018; Robinson-García, Jiménez-Contreras, & Torres-Salinas, 2016), where studies use them to confirm or extend the results of other research (Darby et al., 2012; Sieber & Trumbo, 1995). One historical cause of this gap is the difficulty of archiving datasets. While archiving is less problematic today, citation practices for datasets take time to develop. Better algorithmic approaches to tracking dataset usage could improve this state. In this work, we hypothesize that a network flow algorithm could track usage more effectively if it propagates publication and dataset citations differently. Such an algorithm would make it possible to correct for differences in citation behavior between these two types of artifacts, increasing the importance of datasets as first-class citizens of science.

In this article, we develop a method for assigning credit to datasets from citation networks of publications, under the assumption that dataset citations are biased. Importantly, our method does not modify the source data for the algorithms. The method does not rely on scientists explicitly citing datasets but infers their usage. We adapt the network flow algorithm of Walker, Xie, Yan, and Maslov (2007) by including two types of nodes: datasets and publications. Our method simulates a random walker that takes into account the differences between the obsolescence rates of publications and datasets, and estimates the score of each dataset, the DataRank. We use metadata from the National Center for Biotechnology Information (NCBI) GenBank nucleic acid sequence database and from Figshare datasets to validate our method. We estimate the relative rank of the datasets with the DataRank algorithm and cross-validate it by predicting their actual usage: the number of visits to the NCBI dataset web pages and the number of downloads of Figshare datasets. We show that our method predicts both types of usage better than citations do and has other qualitative advantages over the alternatives. We discuss interpretations of our results and implications for data citation infrastructures and future work.

2 Why measure dataset impact?

Scientists may be incentivized to adopt better and broader data sharing behaviors if they, their peers, and institutions are able to measure the impact of datasets (e.g., see Kidwell et al., 2016; Silvello, 2018). In this context, we review impact assessment conceptual frameworks and studies of usage statistics and crediting of scientific works more specifically. These areas of study aim to develop methods for scientific indicators of the usage and impact of scholarly outputs. Impact assessment research also derives empirical insights from research products by assessing the dynamics and structures of connections between the outputs. These connections can inform better policy-making for research data management, cyberinfrastructure implementation, and funding allocation.

Methods for measuring usage and impact cover a variety of dimensions, from social media to code use and institutional metrics. Several of these approaches recognize the artificial distinction between the scientific process and product (Priem, 2013). For example, altmetrics is one way to measure engagement with diverse research products and to estimate the impact of non-traditional outputs (Priem, 2014). Researchers predict that it will soon become a part of the citation infrastructure to routinely track and value “citations to an online lab notebook, contributions to a software library, bookmarks to datasets from content-sharing sites such as Pinterest and Delicious” (from Priem, 2014). In short, if science has made a difference, it will show up in a multiplicity of places. As such, a correspondingly wider range of metrics is needed to attribute credit to the many locations where research works reflect their value. For example, datasets contribute to thousands of papers in NCBI’s Gene Expression Omnibus, and these attributions will continue to accumulate, just as papers accumulate citations, for a number of years after the datasets are publicly released (Piwowar, 2013; Piwowar, Vision, & Whitlock, 2011). Efforts to track these other sources of impact include ImpactStory, statistics from Figshare, and Altmetric.com (Robinson-Garcia, Mongeon, Jeng, & Costas, 2017).

Credit attribution efforts include those by federal agencies to expand the definition of scientific works beyond publications. For example, in 2013 the National Science Foundation (NSF) recognized the importance of measuring scientific artifacts other than publications by asking researchers for “products” rather than just publications. This represents a significant change in how scientists are evaluated (Piwowar, 2013). Datasets, software, and other non-traditional scientific works are now considered by the NSF as legitimate contributions to the publication record. Furthermore, real-time science is presented in several online mediums; algorithms filter, rank, and disseminate scholarship as it happens. In sum, the traditional journal article is increasingly being complemented by other scientific products (Priem, 2013).

Yet despite the crucial role of data in scientific discovery and innovation, datasets do not get enough credit (Silvello, 2018). If credit were properly accrued, researchers and funding agencies would use it to track and justify work and funding to support datasets. Consider the recent Rich Context Competition, which aimed to fill this gap by detecting dataset mentions in full-text papers (Zeng & Acuna, 2020). Because current citation networks do not track these dataset mentions, dataset citations are biased (Robinson-García et al., 2016). The FAIR (findable, accessible, interoperable, reusable) principles of open research data are one major initiative that is spearheading better practices for tracking digital assets such as datasets (Wilkinson et al., 2016). However, the initiative is conceptual and lacks a technical implementation for data usage and impact assessment. There remains a need to establish methods to better estimate dataset usage.

3 Materials and methods

3.1 Datasets

3.1.1 OpenCitations Index (COCI)

The OpenCitations index (COCI) is an index of Crossref’s open DOI-to-DOI citation data. We obtained a snapshot of COCI (November 2018 version), which contains approximately 450 million DOI-to-DOI citations. Each COCI record includes the citing DOI, the cited DOI, and the publication date of the citing DOI. The algorithm proposed in this paper requires the publication year. However, not all DOIs in COCI have a publication date, so we use the Microsoft Academic Graph to fill this gap.

3.1.2 Microsoft Academic Graph (MAG)

The Microsoft Academic Graph is a large heterogeneous graph consisting of six types of entities: paper, author, institution, venue, event, and field of study (Sinha et al., 2015). Concretely, the description of a paper consists of DOI, title, abstract, and published year, among other fields. We downloaded a copy of MAG in November 2019, which contains 208,915,369 papers. As a supplement to COCI, we extract the DOI and published year from MAG to supply publication years for the DOIs in COCI that lack a publication date.
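The gap-filling step described above can be sketched as a simple lookup. This is a minimal illustration, not the paper's actual pipeline: the function name, the dictionary layout, the example DOIs, and the choice to match DOIs case-insensitively are all assumptions made here.

```python
def fill_publication_years(coci_years, mag_years):
    """Return a DOI -> year mapping, consulting MAG only where COCI has no year.

    coci_years and mag_years are hypothetical dicts keyed by DOI.
    Matching DOIs case-insensitively is an assumption of this sketch.
    """
    mag_lookup = {doi.lower(): year for doi, year in mag_years.items()}
    filled = {}
    for doi, year in coci_years.items():
        if year is None:
            # Fall back to MAG; the year stays None if MAG has no match either.
            year = mag_lookup.get(doi.lower())
        filled[doi] = year
    return filled


# Made-up DOIs for illustration only.
coci_years = {"10.1000/a": 2015, "10.1000/B": None, "10.1000/c": None}
mag_years = {"10.1000/b": 2012}
filled = fill_publication_years(coci_years, mag_years)
```

DOIs that remain without a year after this step would still need separate handling, since the algorithm requires a publication year for every node.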

3.1.3 PMC Open Access Subset (PMC-OAS)

The PubMed Central Open Access Subset is a collection of full-text open access journal articles in biomedical and life sciences. We obtained a snapshot of PMC-OAS in August 2019. It consists of about 2.5 million full-text articles organized in well-structured XML files. The articles are identified by a unique id called PMID. We also obtained a mapping between PMIDs and DOIs from NCBI, which enabled us to integrate PMC-OAS into the citation network.

3.1.4 GenBank

GenBank is a genetic sequence database that contains an annotated collection of all publicly available nucleotide sequences for almost 420,000 formally described species (Sayers et al., 2019). We obtained a snapshot of the GenBank database (version 230) with 212,260,377 gene sequences. We removed the sequences without a submission date, which left us with 77,149,105 sequences.

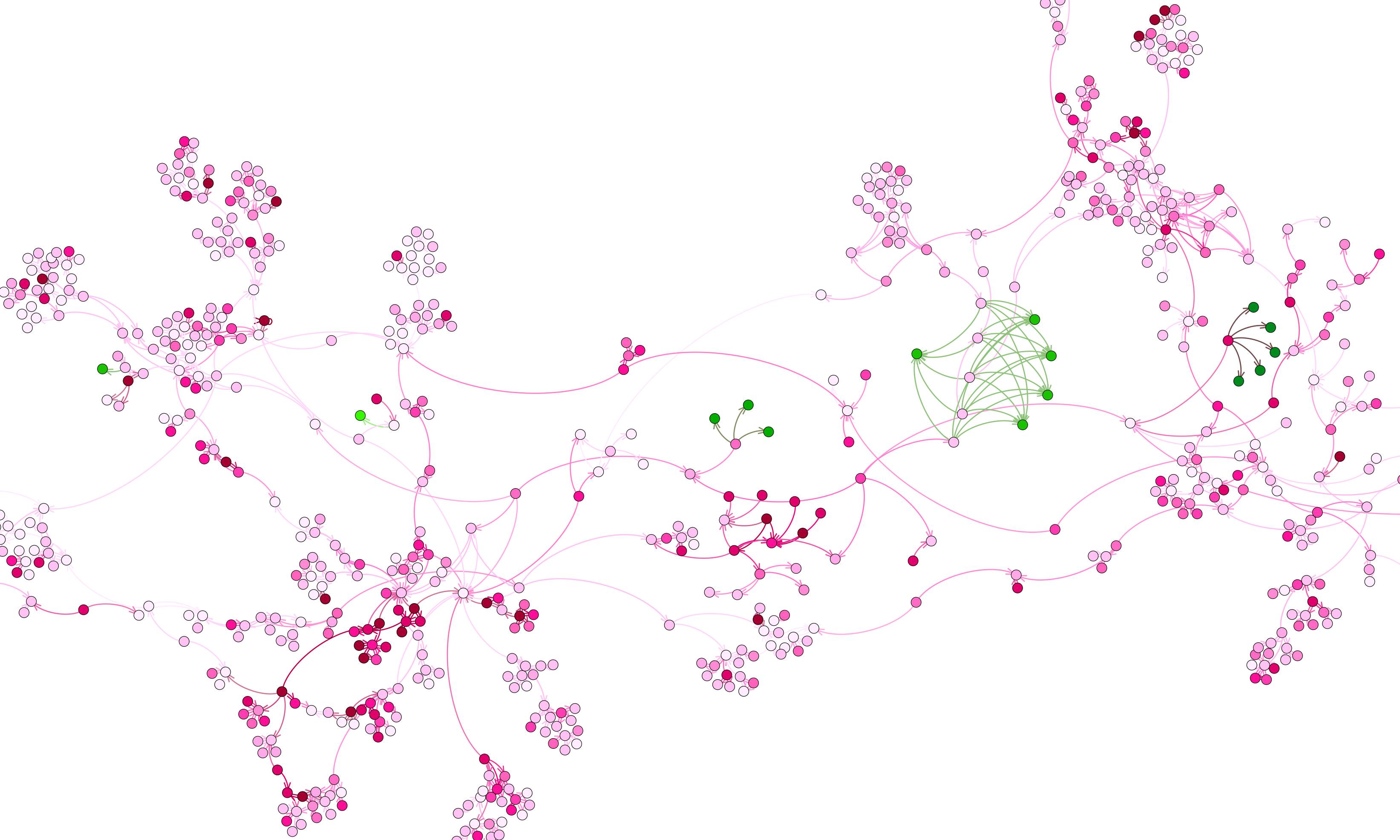

3.2 Construction of citation network

The citation networks in this paper consist of two types of nodes and two types of edges. Nodes are papers and datasets, and edges are citations between two nodes. Concretely, papers cite each other to form paper-paper edges, whereas datasets can only be cited by papers, which forms paper-dataset edges. As shown in the construction workflow (Fig. 2), we build the paper-paper citation network using COCI and MAG and build two separate paper-dataset edge sets using GenBank and Figshare. We then integrate the paper-dataset edge sets into the paper-paper citation network to form two complete citation networks.
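As a rough sketch of the structure just described, the two edge sets can be merged into a single network with typed nodes. The function name, the edge-list format, and the node identifiers below are hypothetical and do not reflect the paper's actual data processing.

```python
from collections import defaultdict


def build_network(paper_paper_edges, paper_dataset_edges):
    """Merge both edge sets into one network with typed nodes.

    Each edge is a (citing, cited) pair. Datasets only ever appear on the
    cited side, because datasets can only be cited by papers.
    """
    node_type = {}
    references = defaultdict(set)  # citing node -> nodes it cites
    for citing, cited in paper_paper_edges:
        node_type[citing] = "publication"
        node_type[cited] = "publication"
        references[citing].add(cited)
    for paper, dataset in paper_dataset_edges:
        node_type.setdefault(paper, "publication")
        node_type[dataset] = "dataset"
        references[paper].add(dataset)
    # Sorted lists make the result deterministic.
    return node_type, {node: sorted(cited) for node, cited in references.items()}


# Toy example: p3 cites p2, and p2 cites paper p1 and dataset d1.
node_type, references = build_network(
    paper_paper_edges=[("p2", "p1"), ("p3", "p2")],
    paper_dataset_edges=[("p2", "d1")],
)
```

Running the same function once with GenBank edges and once with Figshare edges would yield the two separate networks mentioned above.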

Please read the full paper for details of data processing.

3.3 Visualization of citation network

3.4 Network models for scientific artifacts

3.4.1 NetworkFlow

We adapt the network model proposed in Walker et al. (2007). This method, which we call NetworkFlow here, is inspired by PageRank and addresses the issue that citation networks are always directed back in time. In this model, each vertex in the graph is a publication. The goal of this method is to propagate a vertex’s impact through its citations.

3.4.2 DataRank

In this article, we extend NetworkFlow to accommodate different kinds of nodes. The extension considers that the probability of starting at any single node should depend on whether the node is a publication or a dataset. That is, publications and datasets may differ in their relevance period. We call this new algorithm DataRank. Mathematically, we define the starting probability of the ith node (Equation 1) as:

\begin{equation} \rho_{i}^{\text{DataRank}}=\begin{cases} \exp\left(-\frac{\text{age}_{i}}{\tau_{\text{pub}}}\right) & \text{if } i \text{ is a publication}\\ \exp\left(-\frac{\text{age}_{i}}{\tau_{\text{dataset}}}\right) & \text{if } i \text{ is a dataset} \end{cases} \tag{1} \end{equation}
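Equation 1 translates directly into code. The sketch below is illustrative only: the function name, the argument names, and the decay times in the example are assumptions made here, not values from the paper.

```python
import math


def starting_probability(age, is_dataset, tau_pub, tau_dataset):
    """Unnormalized starting probability of a node, following Equation 1.

    age is the node's age and tau_pub / tau_dataset are the decay times,
    all in the same time unit (for example, years).
    """
    tau = tau_dataset if is_dataset else tau_pub
    return math.exp(-age / tau)


# Illustrative decay times only: a paper and a dataset of the same age
# receive different starting probabilities.
rho_paper = starting_probability(age=2.0, is_dataset=False, tau_pub=2.0, tau_dataset=4.0)
rho_dataset = starting_probability(age=2.0, is_dataset=True, tau_pub=2.0, tau_dataset=4.0)
```

With a longer decay time for datasets, as in this made-up example, an older dataset keeps a higher starting probability than a paper of the same age.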

3.4.3 DataRank-FB

In DataRank, each time the walker moves, there are two options: to stop with probability \alpha or to continue the search through the reference list with probability 1-\alpha. However, there may exist a third choice: to continue the search through the papers that cite the current paper. In other words, the researcher can move in two directions: forwards and backwards. We call this modified method DataRank-FB. In this method, one may stop with probability \alpha-\beta, continue the search forward with probability 1-\alpha, or continue backward with probability \beta. To keep them within the unit simplex, the parameters must satisfy \alpha>0, \beta>0, and \alpha>\beta.
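As a quick check of the three walker probabilities above (a worked sum, not an additional result from the paper), they add up to one for any choice of \alpha and \beta:

\begin{equation} (\alpha-\beta)+(1-\alpha)+\beta=1 \end{equation}

Each term is also non-negative when 0<\beta<\alpha\leq 1. The upper bound \alpha\leq 1 is an assumption added here to keep 1-\alpha non-negative; it is not stated explicitly in the text.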

Then, we define another transition matrix from the citation network as follows:

\begin{equation} M_{ij}=\begin{cases} \frac{1}{k_{j}^{\text{in}}} & \text{if } j \text{ is cited by } i \\ 0 & \text{otherwise} \end{cases} \tag{2} \end{equation}

where k_{j}^{\text{in}} is the number of papers that cite j.

We update the average path length to all papers in the network starting from \rho as

\begin{equation} T=I\cdot\rho+(1-\alpha)W\cdot\rho+\beta M\cdot\rho+(1-\alpha)^{2}W^{2}\rho+\beta^{2}M^{2}\rho+\cdots \tag{3} \end{equation}
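The series in Equation 3 can be approximated by truncating it after a fixed number of terms. The following is a minimal sketch under stated assumptions, not the authors' implementation. The matrix W is not defined in this summary, so the sketch assumes, following Walker et al. (2007), that W_{ij}=1/k_{j}^{\text{out}} if j cites i and 0 otherwise. The function names, the `n_terms` cutoff, and the toy network are hypothetical.

```python
from collections import defaultdict


def step(columns, vector):
    """Multiply a column-normalized sparse matrix by a sparse vector.

    columns[j] lists the nodes i with a nonzero entry in column j; each
    of those entries equals 1 / len(columns[j]).
    """
    out = defaultdict(float)
    for j, value in vector.items():
        targets = columns.get(j, ())
        for i in targets:
            out[i] += value / len(targets)
    return dict(out)


def datarank_fb(references, rho, alpha, beta, n_terms=20):
    """Truncated evaluation of Equation 3.

    references[j] lists the nodes cited by j (columns of W, assumed form).
    The reverse mapping gives the nodes citing j (columns of M, Equation 2).
    """
    cited_by = defaultdict(list)
    for citing, cited_nodes in references.items():
        for cited in cited_nodes:
            cited_by[cited].append(citing)
    total = dict(rho)  # the I * rho term
    forward, backward = dict(rho), dict(rho)
    for n in range(1, n_terms + 1):
        forward = step(references, forward)  # W^n rho
        backward = step(cited_by, backward)  # M^n rho
        for i, value in forward.items():
            total[i] = total.get(i, 0.0) + (1 - alpha) ** n * value
        for i, value in backward.items():
            total[i] = total.get(i, 0.0) + beta ** n * value
    return total


# Toy network: p3 cites p2, and p2 cites paper p1 and dataset d1.
references = {"p2": ["p1", "d1"], "p3": ["p2"]}
rho = {"p1": 1.0, "p2": 1.0, "p3": 1.0, "d1": 1.0}
scores = datarank_fb(references, rho, alpha=0.5, beta=0.1)
```

Because citations only point back in time, the powers of W and M vanish after a few steps on this toy network, so the truncation is exact here. Setting `beta=0` drops every backward term and leaves only the forward series.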

4 Results

We aim to determine whether the estimated rank of a dataset based on citation data is related to a real-world measure of relevance such as page views or downloads. We propose a method for estimating rankings, which we call DataRank, that considers differences in citation dynamics between publications and datasets. We also propose some variants of this method and compare all of them to standard ranking algorithms. We use visits to GenBank datasets and downloads of Figshare datasets as measures of real usage, and we investigate which of the methods works best for ranking the datasets.

Please read the full paper for details.

5 Discussion

The goal of this article is to better evaluate the importance of datasets through article citation network analysis. Compared with the mature citation mechanisms of articles, referencing datasets is still in its infancy. Acknowledging the long time the practice of citing datasets will take to be adopted, our research aims at recovering the true importance of datasets even if their citations are biased compared to publications.

Scholars disagree on how to give credit to research outcomes. Regardless of how disputed citations are as a measure of credit, they complement other measures that are harder to quantify, such as peer review assessment, or real usage, such as downloads. Citations, however, are rarely used for datasets (Altman & Crosas, 2013), giving these important research outcomes less credit than they might deserve. Our proposal aims at solving some of these issues by constructing a network flow that predicts real usage better than other methods. While citations are not a perfect measure of credit, having a method that can at least attempt to predict usage is advantageous.

Previous research has examined ways of normalizing citations by time, field, and quality. This relates to our DataRank algorithm in that we are trying to normalize the citations by year and artifact type.

We find it useful to interpret the effect of different decay times on the performance of DataRank.

6 Conclusion

Understanding how datasets are used is an important topic in science. Datasets are becoming crucial for the reproduction of results and the acceleration of scientific discoveries. Scientists, however, tend to neglect citing datasets, and therefore there is no proper measurement of how impactful datasets are. Our method uses the publication-publication citation network to propagate the impact to the publication-dataset citation network. Using two databases of real dataset networks, we demonstrate that our method predicts actual usage more accurately than other methods. Our results suggest that datasets have different citation dynamics from those of publications. In sum, our study provides a prescriptive model to understand how citations interact in publication and dataset citation networks and gives a concrete method for producing ranks that are predictive of actual usage.

Our study advances an investigation of datasets and publications with novel ideas from network analysis. Our work puts together concepts from other popular network flow estimation algorithms such as PageRank (Brin & Page, 1998) and NetworkFlow (Walker et al., 2007). While scientists might potentially take a great amount of time to change their citation behavior, we could use techniques such as the one put forth in this article to accelerate the credit assignment for datasets. Ultimately, the need for tracking datasets will only become more pressing and therefore we must adapt or miss the opportunity to make datasets first-class citizens of science.

Citation

@article{zeng2020,
  author = {Zeng, Tong and Wu, Longfeng and Bratt, Sarah and Acuna, Daniel E.},
  title = {Assigning Credit to Scientific Datasets Using Article Citation Networks},
  journal = {Journal of Informetrics},
  volume = {14},
  number = {2},
  pages = {101013},
  date = {2020-05},
  url = {https://www.sciencedirect.com/science/article/pii/S1751157719301841},
  doi = {10.1016/j.joi.2020.101013},
  issn = {1751-1577},
  langid = {en},
  abstract = {A citation is a well-established mechanism for connecting scientific artifacts. Citation networks are used by citation analysis for a variety of reasons, prominently to give credit to scientists’ work. However, because of current citation practices, scientists tend to cite only publications, leaving out other types of artifacts such as datasets. Datasets then do not get appropriate credit even though they are increasingly reused and experimented with. We develop a network flow measure, called DataRank, aimed at solving this gap. DataRank assigns a relative value to each node in the network based on how citations flow through the graph, differentiating publication and dataset flow rates. We evaluate the quality of DataRank by estimating its accuracy at predicting the usage of real datasets: web visits to GenBank and downloads of Figshare datasets. We show that DataRank is better at predicting this usage compared to alternatives while offering additional interpretable outcomes. We discuss improvements to citation behavior and algorithms to properly track and assign credit to datasets.}
}